
June 24, 2021

Calibration and Verification

In a metal detector audit, quality control personnel need to understand the difference between calibration and verification. Metal detectors cannot be calibrated in the usual sense, because there is no standard to which a metal detector can be set. That might sound odd, since we use metal detectors to find a specified size of metal, whether it is ferrous, non-ferrous or stainless steel. If the right settings are chosen, we expect the detector to locate metal of the specified size. For example, with one group of settings it should detect 3.0mm ferrous; change the settings and it should be able to detect 1.5mm ferrous. We expect that altering the settings will change the size of metal it is capable of detecting. A detector CAN, however, be verified and validated: it can be tested to confirm that it meets the standards set forth in a HACCP or other food safety plan.

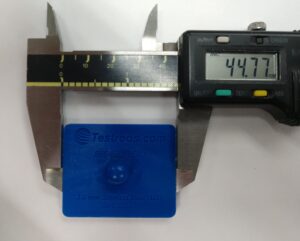

But it’s not like calibrating a scale. With a scale, you take a standard weight, perhaps 1 lb, and adjust the scale so that it reads 1 lb. You might also send a scale or another instrument, such as calipers, to a third-party vendor, who would confirm that the device is calibrated, usually to an internationally accepted standard, and that it measures accurately and consistently.
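For contrast, the scale adjustment described above can be sketched as a one-point span correction. This is a simplified illustration that assumes the scale's only error is proportional (a gain error); real calibration procedures usually also check zero and several points across the range. The function names `span_factor` and `corrected_reading` are hypothetical.

```python
def span_factor(reference_weight: float, raw_reading: float) -> float:
    """Gain correction that makes the scale read the reference weight exactly.

    Assumes a purely proportional error (hypothetical, simplified model).
    """
    if raw_reading <= 0:
        raise ValueError("raw reading must be positive")
    return reference_weight / raw_reading


def corrected_reading(raw_reading: float, factor: float) -> float:
    """Apply the stored gain correction to any later raw reading."""
    return raw_reading * factor


# A 1 lb standard weight reads 0.98 lb before adjustment.
factor = span_factor(1.0, 0.98)
print(round(corrected_reading(0.98, factor), 6))  # 1.0
```

The point of the sketch is that a scale has a known reference quantity to adjust against. A metal detector has no equivalent reference signal, which is why it is verified against test samples rather than calibrated to a standard.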

No Calibration Standard

A metal detector, however, has no international standard to which it can be “calibrated”, which is why the distinction between calibration and verification matters. A wide variety of variables affect a detector’s capabilities, and the most important of them is the product itself. Let’s take a brief look at several other factors:

- Orientation effect – Occurs when the diameter of a wire contaminant is smaller than the diameter of the spherical test piece the detector is set to find. Sensitivity is stated as a sphere (ball) size because a sphere presents the same profile in every direction, whereas contaminants such as wire are harder to detect in some orientations than in others as they travel through the aperture.

- Aperture size and position – A contaminant close to the wall of the metal detector is easier to detect than one at the center. The larger the aperture, the lower the sensitivity. For consistent results, products should pass directly through the center of the aperture.

- Packaging material – The material used to pack a product also affects sensitivity. Any metal in the packaging will affect the metal detector, reducing sensitivity and possibly creating a false metal signal.

- Environmental conditions – Plant vibrations and temperature fluctuations can affect the metal detector’s sensitivity.

- Product characteristics – Some products behave like metal when passing through the detector. For example, products with high moisture or salt content, such as meat and poultry, can often create a ‘false’ signal, making it difficult to distinguish metal from product.

- Process speed – This is not usually a limiting factor for conveyorized metal detectors, where product passes through at a consistent speed, but performance is hindered in vertical (gravity) and pipeline metal detection systems because the speed and flow of the product vary.

- Detector frequency – Metal detectors operate at different electromagnetic frequencies depending on the type of product being inspected.

Verification

These are important considerations, but they are only some of the factors involved in verifying and validating a metal detector. Ultimately, the term calibration applies only to the relationship between the metal detector and the product. Once the proper aperture size is in place and each of these factors has been settled, a metal detector is “calibrated” with clean, uncontaminated product so that the product itself does not trigger the detector. In simple terms, clean product run through your detector should not be rejected. This step eliminates the “Product Effect” from the testing process.

Product effect is the signal produced by the magnetic and conductive properties of a product. As the product passes through the aperture, it affects the coils used in the detection process, so the metal detector must account for this signal and either cancel it out or ignore it. During setup, the detector needs to “learn” the product effect. Once it has done so (taking the other factors above into account as well), it can be set to a baseline: a setting in which clean product and its packaging (paper, cardboard or other non-metallic material) move through the detector without triggering a detection alarm or the associated reject device.
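The idea of learning a baseline from clean product can be illustrated with a deliberately simplified, hypothetical model. It assumes the detector reports a single signal amplitude per pack and that the reject threshold is the largest clean-product signal multiplied by a safety margin; real detectors typically also separate product and metal signals by phase, and the margin of 1.5 is an arbitrary assumption. `ProductBaseline`, `learn_product_effect` and `should_reject` are illustrative names, not a manufacturer's API.

```python
from dataclasses import dataclass


@dataclass
class ProductBaseline:
    """Hypothetical baseline learned from clean (uncontaminated) product passes."""
    max_clean_signal: float
    margin: float = 1.5  # assumed safety factor above the largest clean signal

    @property
    def reject_threshold(self) -> float:
        return self.max_clean_signal * self.margin


def learn_product_effect(clean_signals: list[float], margin: float = 1.5) -> ProductBaseline:
    """Record the largest signal produced by clean product and its packaging."""
    if not clean_signals:
        raise ValueError("need at least one clean-product pass")
    return ProductBaseline(max(abs(s) for s in clean_signals), margin)


def should_reject(signal: float, baseline: ProductBaseline) -> bool:
    """Reject only when the signal exceeds what clean product alone produces."""
    return abs(signal) > baseline.reject_threshold


clean_passes = [0.8, 1.1, 0.9, 1.2, 1.0]  # arbitrary example signal units
baseline = learn_product_effect(clean_passes)
print(all(not should_reject(s, baseline) for s in clean_passes))  # True
print(should_reject(4.0, baseline))  # True
```

In this model, a larger margin makes false rejects of clean product rarer but lets smaller contaminants through, which is one way to see why the achievable sensitivity depends on the product being run.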

When it comes to an audit, many people ask an auditor to come in and “calibrate” their detectors. An auditor can assist with that, as explained above, but that’s not what is done during an audit. The auditor’s job is to “verify” that the metal detector can achieve the specifications (usually from a HACCP plan) that the quality control department needs it to achieve. An auditor should be able to explain the difference between calibration and verification.

In a typical test:

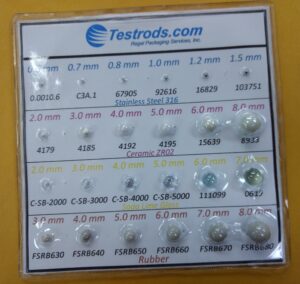

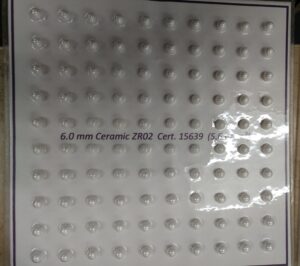

- The auditor will confirm that the customer’s test samples (available at Testrods.com) are clearly marked with size, composition, and certificate number. Whenever possible, Detector Audits will supply certified test samples for testing.

- The unit will be observed while running product, to watch for signal changes and potential sources of interference.

- Settings will be recorded as found on arrival, before any changes are made to the unit.

- Test samples should travel through the approximate centerline of the aperture, as this is the least sensitive area of the aperture.

- Samples should be placed within the product when possible.

- Conveyor units should be tested with the sample placed on the product, preferably in the leading, center, and trailing positions.

- Gravity units should be tested from the point of product freefall.

- Pipeline units should be tested with the sample passing through the center of the pipe.

- Test samples must activate the reject device, to confirm that it properly removes contaminated product from production. Verifying the reject device may include:

  - Testing with the contaminant at the leading and trailing edge

  - Running successive packs

  - Attempting alternate packs

- Each test sample will be passed through the detector 3 times. A successful test consists of 3 consecutive detections for that test sample.

- Changes to unit settings will be made, as necessary, to achieve successful testing. All changes will be recorded. These alterations should be made by plant personnel whenever possible.
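The pass/fail rule in the list above can be expressed as a short sketch. It assumes each run is logged with whether the detector signalled and whether the reject device actually removed the pack, and that a run counts toward the 3 consecutive detections only when both happen. The `TestRun` record and `sample_passes` function are hypothetical illustrations, not part of any audit software.

```python
from dataclasses import dataclass


@dataclass
class TestRun:
    """One pass of a certified test sample through the detector (hypothetical record)."""
    sample: str     # e.g. "1.5mm Ferrous"
    position: str   # e.g. "lead", "center", "trail"
    detected: bool  # detector signalled metal
    rejected: bool  # reject device removed the pack


def sample_passes(runs: list[TestRun], required: int = 3) -> bool:
    """True if the sample produced `required` consecutive detect-and-reject runs."""
    streak = 0
    for run in runs:
        if run.detected and run.rejected:
            streak += 1
            if streak >= required:
                return True
        else:
            streak = 0  # a miss, or a detect without a reject, breaks the sequence
    return False


runs = [
    TestRun("1.5mm Ferrous", "lead", True, True),
    TestRun("1.5mm Ferrous", "center", True, True),
    TestRun("1.5mm Ferrous", "trail", True, True),
]
print(sample_passes(runs))  # True

# A detection that the reject device fails to act on does not count.
runs[1] = TestRun("1.5mm Ferrous", "center", True, False)
print(sample_passes(runs))  # False
```

Treating a detection without a reject as a failure reflects the requirement that test samples must activate the reject device, not just the alarm.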

Conclusion

So if the company (customer) has already established that it needs to achieve 1.5mm Ferrous, 2.0mm Non-Ferrous and 3.0mm Stainless Steel, then the auditor, using the procedure outlined above, will “verify” that standard. If the product changes, the achievable standards could change, so the verification applies only to the products tested on that metal detection system. A change in the product requires that the detector be recalibrated for that product, after which the auditor can verify that it meets the intended standards. Understanding the terminology means being clear about what you do on your production line and how it’s done. In the final analysis, that makes for a safer product in the marketplace, so know the difference between calibration and verification.
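The point that a verification covers only the products actually tested can be sketched as a per-product check against the plan's sensitivity standard. The standard below is the example from the text; the product names and smallest-detected sphere sizes are made-up illustrative data, and `is_verified` is a hypothetical helper.

```python
# Example HACCP standard from the text: test-sphere diameters in mm.
SPEC_MM = {"Ferrous": 1.5, "Non-Ferrous": 2.0, "Stainless Steel": 3.0}


def is_verified(product: str, spec: dict[str, float],
                smallest_detected: dict[tuple[str, str], float]) -> bool:
    """True only if every metal type in the spec was met on this specific product.

    `smallest_detected` maps (product, metal type) to the smallest certified
    sphere that passed the detect-and-reject test on that product.
    """
    for metal, required_mm in spec.items():
        achieved = smallest_detected.get((product, metal))
        if achieved is None or achieved > required_mm:
            return False  # untested, or only a larger sphere was detected
    return True


# Hypothetical audit records for illustration only.
smallest_detected = {
    ("Chicken nuggets", "Ferrous"): 1.5,
    ("Chicken nuggets", "Non-Ferrous"): 2.0,
    ("Chicken nuggets", "Stainless Steel"): 3.0,
    ("Salted ham", "Ferrous"): 1.5,
    ("Salted ham", "Non-Ferrous"): 2.0,
    ("Salted ham", "Stainless Steel"): 3.5,
}
print(is_verified("Chicken nuggets", SPEC_MM, smallest_detected))    # True
print(is_verified("Salted ham", SPEC_MM, smallest_detected))         # False
print(is_verified("Marinated chicken", SPEC_MM, smallest_detected))  # False
```

Keying results by product means a new product on the same line starts out unverified until the detector has been set up for it and tested again.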

2 Comments

Need to do metal detector calibration, could you give me a quote?

Thanks for your inquiry.

The words calibration and validation are sometimes used interchangeably. Technically speaking, the only “calibration” a metal detector needs is to the product being tested. In other words, when clean, safe product is fed through the detector, it should not result in a reject.

Once that’s done, what we do is provide audit services as an outside vendor.

We validate, for your food safety records, that the metal detector is working and achieving the standards you indicate it will achieve (often written into a HACCP plan). If you say it will achieve (purely as an example) 2.0mm Ferrous, 2.5mm Non-Ferrous and 3.0mm Stainless Steel 316, we validate that this is true and provide you with a report stating so. Most, if not all, food producers, packers and packaging plants are required to have this kind of certificate on file for a GFSI, BRC or other food safety audit.